I started the new year troubleshooting Docker overlay network traffic pushed through a Cisco ACI fabric: it was not working despite physical connectivity and contracts being in place. Or so we thought... because VXLAN-encapsulated packets (used by Docker overlays) do not follow the usual expected pattern.

The setup

The overall picture is of a Docker Swarm with machines in two separate data centres, each served by its own Cisco ACI fabric. The swarm uses overlay networks (check out my post on Docker Networking for more details) to interconnect containers running on multiple machines for the same service, and these overlays encapsulate packets in VXLAN for identification/segmentation.

Now, the above is unnecessarily complicated for the purposes of this post as, in the end, we can massively simplify the challenge to this: sending VXLAN encapsulated traffic bidirectionally between 2 different EPGs (Endpoint Groups) on an ACI fabric.

VXLAN packets

This is where it gets interesting. VXLAN encapsulates packets in additional IP/UDP headers... and it is based on these that any basic network level filtering/policy will be applied. The added UDP header is particularly interesting: the destination port is always the VXLAN port (4789, the IANA-assigned value and Docker's default), while the source port is quasi-random (or calculated as a hash of the original packet headers to help with load-balancing distribution).
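To make the encapsulation a bit more concrete, here is a minimal Python sketch of the two pieces mentioned above: the 8-byte VXLAN header (layout as per RFC 7348) and a source port derived from the inner flow. The `outer_source_port` function and its CRC32 hash are purely illustrative assumptions (real implementations use their own hash), and the VNI value 4097 is just an arbitrary example.

```python
import struct
import zlib

VXLAN_PORT = 4789  # IANA-assigned VXLAN UDP destination port


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header (RFC 7348 layout) for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # 'I' flag: the VNI field is valid
    # Word 1: flags (8 bits) + reserved (24 bits)
    # Word 2: VNI (24 bits) + reserved (8 bits)
    return struct.pack("!II", flags << 24, vni << 8)


def outer_source_port(inner_flow: tuple) -> int:
    """Hypothetical hash: map an inner flow to the 49152-65535 port range.

    RFC 7348 recommends hashing inner packet fields into this range;
    the CRC32 used here is only a stand-in for a real implementation's hash.
    """
    digest = zlib.crc32(repr(inner_flow).encode())
    return 49152 + digest % 16384


print(vxlan_header(4097).hex())  # 0800000000100100

# The inner flow of a request and of its reply hash independently,
# so the two outer source ports are generally unrelated.
request_sport = outer_source_port(("10.0.0.1", "10.0.0.2", "icmp"))
reply_sport = outer_source_port(("10.0.0.2", "10.0.0.1", "icmp"))
print(request_sport, reply_sport, VXLAN_PORT)
```

Whatever the hash, the only stable value in the outer UDP header is the destination port, which is what any port-based filter ends up keying on.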

It is not immediately obvious that VXLAN traffic is unidirectional and stateless in nature. Its main goal is to take a packet belonging to an L2 domain from point A to point B (the tunnel endpoints) over an L3 network... the fact that the carried packet may be part of a bidirectional flow is irrelevant.

So if the tunneled traffic is a ping between a host behind A and one behind B, then the echo request will be 1 tunneled packet from A to B and the echo reply will be a different tunneled packet from B to A. While logically we know they're part of the same flow (same applies for a TCP connection for example), from the point of view of the VXLAN encapsulation they are independent.

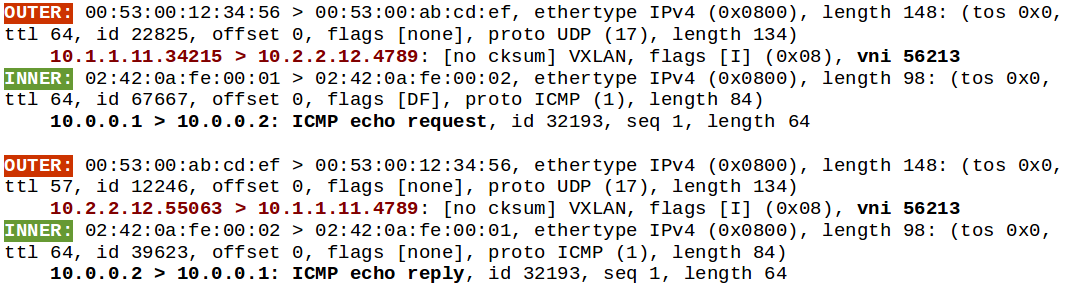

Here, 10.1.1.11 and 10.2.2.12 are tunnel endpoints (docker hosts), carrying packets sent between 2 containers (10.0.0.1 and 10.0.0.2) on the same overlay.

As you can see in the packet capture above, the two ICMP packets are encapsulated independently of the flow. Sure, they share the same VNID (necessary to identify both packets as part of the same overlay/tunnel) but look closely at the outer UDP header ports.

Yep, that destination port is always 4789 regardless of the direction.

VXLAN and ACI Contracts

ACI Contracts contain filters which specify various parts of the L3/L4 headers to inspect. The outer ones that is, as there's no concept of tunnels or nested headers. So in the case of VXLAN packets, it will look at the outer headers and be completely oblivious (as expected) to the tunneled traffic inside.

The trouble begins because these contracts make a very important assumption: traffic follows the client/server model. In ACI terminology, it's consumer/provider, but essentially the same thing.

So for bidirectional communication, a contract will allow the well known pattern, that is packets to the defined destination port with a random source high port. Because it's bidirectional, the return traffic will be allowed with the same port numbers swapped: from the defined port back to the random high port.

But for VXLAN traffic, this pattern is broken (as you can see above), because there is no bidirectional traffic - at best there are two independent unidirectional flows, one for A->B and another for B->A.
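The mismatch can be illustrated with a small Python model. This is a deliberately simplified sketch of contract matching, not ACI's actual data model or API: the `Packet` and `Rule` types, the EPG names "A" and "B" and the example source ports are all made up for illustration.

```python
from typing import NamedTuple, Optional

VXLAN_PORT = 4789


class Packet(NamedTuple):
    src_epg: str
    dst_epg: str
    sport: int
    dport: int


class Rule(NamedTuple):
    src_epg: str
    dst_epg: str
    sport: Optional[int]  # None means "any port"
    dport: Optional[int]  # None means "any port"


def bidirectional_contract(consumer: str, provider: str, dport: int) -> list:
    """Client/server model: the explicit rule plus the derived return rule."""
    return [
        Rule(consumer, provider, None, dport),  # consumer -> provider:dport
        Rule(provider, consumer, dport, None),  # return traffic, ports swapped
    ]


def permitted(packet: Packet, rules: list) -> bool:
    """A packet is allowed if any rule matches its EPGs and outer ports."""
    return any(
        r.src_epg == packet.src_epg
        and r.dst_epg == packet.dst_epg
        and r.sport in (None, packet.sport)
        and r.dport in (None, packet.dport)
        for r in rules
    )


rules = bidirectional_contract("A", "B", VXLAN_PORT)

# Outer headers of the two tunneled packets (echo request and echo reply).
a_to_b = Packet("A", "B", sport=51234, dport=VXLAN_PORT)
b_to_a = Packet("B", "A", sport=60311, dport=VXLAN_PORT)

print(permitted(a_to_b, rules))  # True
print(permitted(b_to_a, rules))  # False
```

The B->A packet is dropped in this model because the derived return rule expects source port 4789, while the VXLAN packet carries 4789 as its destination port.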

The solutions

These are the two ways I could come up with to make this work in ACI - if there are other solutions please get in touch (or use the comments below).

First one is to use a pair of unidirectional contracts, which sounds the most natural. You create a contract that allows A->B:4789 and another that allows B->A:4789, making them unidirectional by disabling the "Reverse Filter Ports" option. You need 2 contracts because the consumers and providers swap places!

The second solution is to add two filters to one contract A->B with "Reverse Filter Ports" enabled:

- allow UDP packets with any SPORT to 4789 DPORT

- allow UDP packets with 4789 SPORT to any DPORT
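Both options can be compared with a small Python sketch. As before, this is a toy model of rule matching under my own assumptions (rules as plain tuples, EPGs named "A" and "B", made-up source ports), not a representation of how ACI actually programs its policy.

```python
VXLAN_PORT = 4789
ANY = None  # wildcard for a port


def reverse(rule):
    """Derive the return rule: swap EPGs and swap source/destination ports."""
    src, dst, sport, dport = rule
    return (dst, src, dport, sport)


def permitted(packet, rules):
    """A packet is allowed if any rule matches its EPGs and outer ports."""
    src, dst, sport, dport = packet
    return any(
        (r_src, r_dst) == (src, dst)
        and r_sport in (ANY, sport)
        and r_dport in (ANY, dport)
        for r_src, r_dst, r_sport, r_dport in rules
    )


# Solution 1: two unidirectional contracts, no reverse rules derived.
two_contracts = [
    ("A", "B", ANY, VXLAN_PORT),
    ("B", "A", ANY, VXLAN_PORT),
]

# Solution 2: one contract with two filters, each with a derived reverse rule.
explicit = [
    ("A", "B", ANY, VXLAN_PORT),
    ("A", "B", VXLAN_PORT, ANY),
]
one_contract = explicit + [reverse(rule) for rule in explicit]

vxlan_packets = [
    ("A", "B", 51234, VXLAN_PORT),
    ("B", "A", 60311, VXLAN_PORT),
]

print(all(permitted(p, two_contracts) for p in vxlan_packets))  # True
print(all(permitted(p, one_contract) for p in vxlan_packets))   # True
print(len(one_contract))                                        # 4

# A non-VXLAN packet that happens to use source port 4789:
odd_packet = ("A", "B", VXLAN_PORT, 53)
print(permitted(odd_packet, two_contracts))  # False
print(permitted(odd_packet, one_contract))   # True
```

In this model both options pass the VXLAN traffic in each direction, but the single contract also matches any UDP packet sourced from port 4789, whatever its destination port.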

For the record, I don't really like either of these.

The first one because it creates 2 contracts for what logically should be a single bidirectional construct, making it more difficult for others to troubleshoot and understand the logic.

The second one is basically trying to be clever about what the "Reverse Filter Ports" option does... and it ends up adding 4 rules (2 explicit, 2 derived). It does use only 1 contract though, so it gains some points in the clarity department.

The conclusion

In the end, it is really an exercise in understanding how a protocol actually functions - without that knowledge it's very difficult to make things work predictably with any vendor implementation out there (or in this case traffic filtering policy). Understanding the "why" is a key prerequisite to finding good solutions.

And, as always, thanks for reading.